In this blog post, you’ll learn how you can run scraping jobs using Selenium from Google Cloud Run, a fully managed solution that eliminates the burden of configuring and managing a Kubernetes cluster. It simplifies your production pipeline and fully automates your scraping process.

Note that I’m not an expert in Google Cloud Run. The downside is that there might be a more optimal way to do all this. The upside is that I know what your questions and frustrations are.

To fully understand this blog post, you should know:

- Basics of GCP

- Basics of Docker

- Python

Glossary

I (incorrectly) tend to use some terms interchangeably. By adding a glossary, I hope to force myself to use correct terminology.

- A (Docker) image is a read-only template with the instructions used to create a (Docker) container.

- A (Docker) container is an instance of an image.

- A (Cloud Run) service is a (Docker) container deployed on Google Cloud Run.

- A deployment is the act of deploying an image as (containerized) service to Google Cloud Run.

- Commands are all run on a local machine, assuming you have gcloud and Docker installed.

- A service account is an account used by an application to make authorized API calls within Google Cloud Platform.

- A header lets the client and the server pass additional information with an HTTP request or response, for example authentication credentials.

FYI: everything is tested on a Win10 machine.

Structure of this blog post

This blog post consists of several sections.

- First, I explain how you can deploy Selenium in Google Cloud Run.

- Next, you will find the commands to create a service account and link it to the Selenium service.

- Update the RemoteConnection class in Selenium’s Python API.

- Build your scraping script and test it on your machine (using the remote Selenium service)

- Finally, you’ll learn to deploy the scraping script.

Deploy Selenium

To deploy a remote Selenium WebDriver to Google Cloud Run, I follow a classic Docker workflow, but feel free to use this Cloud Build tutorial.

- First, pull the standalone Chrome Selenium image from Docker Hub.

- Tag the image with the GCP Container Registry destination (you can also use gcr.io).

- Push the image by referring to the tag we set in the previous step.

docker pull selenium/standalone-chrome
docker image tag selenium/standalone-chrome us.gcr.io/<PROJECT_NAME>/<SELENIUM_IMAGE_NAME>
docker push us.gcr.io/<PROJECT_NAME>/<SELENIUM_IMAGE_NAME>
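To make the naming in these three steps explicit, here is a minimal Python sketch that assembles the same commands. The function names, the project name my-project and the image name selenium are hypothetical; the sketch only builds the command lists and does not run Docker.

```python
def registry_tag(project, image_name, host="us.gcr.io"):
    """Return the Container Registry destination for an image."""
    return f"{host}/{project}/{image_name}"


def push_commands(source_image, project, image_name, host="us.gcr.io"):
    """Return the pull, tag and push commands as argument lists."""
    target = registry_tag(project, image_name, host)
    return [
        ["docker", "pull", source_image],
        ["docker", "image", "tag", source_image, target],
        ["docker", "push", target],
    ]


if __name__ == "__main__":
    # Hypothetical project and image names, for illustration only.
    for command in push_commands("selenium/standalone-chrome", "my-project", "selenium"):
        print(" ".join(command))
```

The point is simply that the tag and the push must refer to exactly the same destination string.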

Using the following command, we deploy the image that we uploaded to the Container Registry as a service to Cloud Run.

In the wizard that follows, you can choose to allow unauthenticated communication with the container. However, I strongly recommend against this for obvious reasons. I have included instructions for authenticated communication with a Selenium container in Cloud Run in the section below.

gcloud run deploy <SELENIUM_SERVICE_NAME> \
    --image us.gcr.io/<PROJECT_NAME>/<SELENIUM_IMAGE_NAME> \
    --port 4444 \
    --memory 2G

Some important things to notice.

Make sure you set the port; otherwise, you’ll encounter the following error during deployment. This occurs because Cloud Run by default sends requests to port 8080, whereas the Selenium container listens on port 4444.

Cloud Run error: Container failed to start. Failed to start and then listen on the port defined by the PORT environment variable.

Furthermore, you should allocate enough memory. If you set the memory too low, sessions will be terminated and you’ll keep running into the following error.

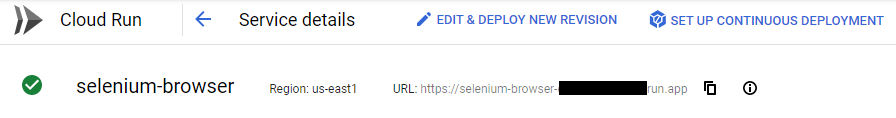

selenium.common.exceptions.WebDriverException: Message: No active session with ID

If your deployment succeeded, you can find the service URL in the Cloud Run section of Google Cloud Platform. You will need this URL when you deploy the scraper, which refers to it.
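Later in this post, the service URL is always used with the /wd/hub path appended. A minimal sketch of that step, assuming the service URL is a plain https URL as shown in the Cloud Run console; the function name hub_url and the hostname in the example are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def hub_url(service_url, hub_path="/wd/hub"):
    """Return the service URL with the Selenium hub path appended exactly once."""
    parts = urlsplit(service_url.strip())
    if parts.scheme != "https" or not parts.netloc:
        raise ValueError("Expected an https service URL, got: %r" % service_url)
    # Drop a trailing slash so the hub path is not doubled up.
    path = parts.path.rstrip("/")
    if not path.endswith(hub_path):
        path += hub_path
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


if __name__ == "__main__":
    # Hypothetical hostname, for illustration only.
    print(hub_url("https://selenium-abc123-uc.a.run.app/"))
```

Passing a URL that already ends in /wd/hub returns it unchanged, so the helper is safe to call twice.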

Create service account

In this section, I explain how you can set up a service account that the scraping service uses to communicate with the Selenium service.

First, create the service account by running the following command.

gcloud iam service-accounts create <INVOKING_SERVICE_ACCOUNT> \
    --description "This service account invokes Selenium on Google Cloud Run." \
    --display-name "Selenium Invoker"

Next, link the service account you just created to the Selenium service in Google Cloud Run.

gcloud run services add-iam-policy-binding <SELENIUM_SERVICE_NAME> \
    --member serviceAccount:<INVOKING_SERVICE_ACCOUNT>@<PROJECT_NAME>.iam.gserviceaccount.com \
    --role roles/run.invoker

Now the Selenium service accepts requests from this service account, and the scraping service can use it.
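The --member value follows a fixed pattern: the service account name, an @, your project ID, and the iam.gserviceaccount.com suffix. A minimal sketch of how that string is put together; the function names and the example values are hypothetical.

```python
def service_account_email(account_id, project_id):
    """Return the email address of a service account in the given project."""
    return f"{account_id}@{project_id}.iam.gserviceaccount.com"


def iam_member(account_id, project_id):
    """Return the value expected by the --member flag for a service account."""
    return "serviceAccount:" + service_account_email(account_id, project_id)


if __name__ == "__main__":
    # Hypothetical account and project names, for illustration only.
    print(iam_member("selenium-invoker", "my-project"))
```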

Update Selenium’s RemoteConnection class

There’s bad news: Selenium’s Python API does not accept header-based authentication. In other words, there’s no out-of-the-box way to authenticate via the webdriver.Remote() method and your scraping script can’t communicate in a safe way with the Selenium container.

The good news: Python is an object-oriented programming language, and we can easily override the necessary classes & methods to enable header-based authentication.

In the code below, you can find the RemoteConnectionV2 class, for which I developed the set_remote_connection_authentication_headers method. This method first checks whether the IDENTITY_TOKEN environment variable has been set manually. If not, it fetches an identity token via the google.oauth2.id_token module.

In the get_remote_connection_headers method, the authentication header is merged with the other headers.
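Stripped of Selenium and Google specifics, the logic of those two methods can be sketched in a few lines. This is only an illustration under my own assumptions: the function names are hypothetical, the token fetcher is passed in as a plain callable, and an empty IDENTITY_TOKEN is treated as missing.

```python
def resolve_identity_token(environ, fetch_token):
    """Prefer a token from the environment; otherwise ask fetch_token for one."""
    token = environ.get("IDENTITY_TOKEN")
    if token:
        return token
    return fetch_token()


def with_auth_header(default_headers, identity_token):
    """Return a copy of the headers with a Bearer Authorization header added."""
    return {**default_headers, "Authorization": "Bearer %s" % identity_token}


if __name__ == "__main__":
    # The lambda stands in for the call to Google's identity token endpoint.
    token = resolve_identity_token({"IDENTITY_TOKEN": "local-token"}, lambda: "fetched-token")
    print(with_auth_header({"Accept": "application/json"}, token))
```

Because the merged dictionary is a copy, the default headers stay untouched between requests.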

from selenium import webdriver
from selenium import __version__
from selenium.webdriver import ChromeOptions
from selenium.webdriver.common.action_chains import ActionChains
from selenium.webdriver.common.desired_capabilities import DesiredCapabilities
from selenium.webdriver.remote import remote_connection
from time import sleep
from datetime import datetime
from base64 import b64encode
from flask import Flask
import psycopg2
import os
import platform
import google.auth.transport.requests
import google.oauth2.id_token

app = Flask(__name__)

# The URL of the remote Selenium service must be provided via the environment.
selenium_url = os.environ.get('SELENIUM_URL')
if selenium_url is None:
    raise Exception('No remote Selenium webdriver provided in the environment.')


# Overriding the RemoteConnection class in order to authenticate with the Selenium WebDriver in Cloud Run.
class RemoteConnectionV2(remote_connection.RemoteConnection):
    # Filled in by set_remote_connection_authentication_headers().
    _auth_header = {}

    @classmethod
    def set_remote_connection_authentication_headers(cls):
        # Environment variable: identity token -- this can be set locally for debugging purposes.
        if os.environ.get('IDENTITY_TOKEN') is not None:
            print('[Authentication] An identity token was found in the environment. Using it.')
            identity_token = os.environ.get('IDENTITY_TOKEN')
        else:
            print('[Authentication] No identity token was found in the environment. Requesting a new one.')
            auth_req = google.auth.transport.requests.Request()
            identity_token = google.oauth2.id_token.fetch_id_token(auth_req, selenium_url)
        cls._auth_header = {'Authorization': 'Bearer %s' % identity_token}

    @classmethod
    def get_remote_connection_headers(cls, parsed_url, keep_alive=False):
        """
        Get headers for remote request -- an update of Selenium's RemoteConnection to include an Authentication header.

        :Args:
         - parsed_url - The parsed url
         - keep_alive (Boolean) - Is this a keep-alive connection (default: False)
        """
        system = platform.system().lower()
        if system == "darwin":
            system = "mac"

        default_headers = {
            'Accept': 'application/json',
            'Content-Type': 'application/json;charset=UTF-8',
            'User-Agent': 'selenium/{} (python {})'.format(__version__, system)
        }

        # Merge the authentication header (if any) into the default headers.
        headers = {**default_headers, **cls._auth_header}

        # Fall back to basic authentication if no identity token was set.
        if 'Authorization' not in headers:
            if parsed_url.username:
                base64string = b64encode('{0.username}:{0.password}'.format(parsed_url).encode())
                headers.update({
                    'Authorization': 'Basic {}'.format(base64string.decode())
                })

        if keep_alive:
            headers.update({
                'Connection': 'keep-alive'
            })

        return headers

Structuring the scraping script

Below the code in the previous section, you can write your scraping script. As you can see, a connection is established to Selenium via the RemoteConnectionV2 class. Next, the authentication headers are set, and the webdriver session is initiated.

Below that, you can place the instructions for collecting web data via the webdriver instance (chrome_driver).
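As an illustration of the kind of instructions meant here, the sketch below loads a page, reads its title and always closes the session afterwards. It is a minimal example under my own assumptions, not the scraper from this post: collect_title is a hypothetical helper, and FakeDriver is a stand-in for chrome_driver so the example runs without a Selenium service.

```python
def collect_title(driver, url):
    """Open url with the given driver and return the page title."""
    try:
        driver.get(url)
        return driver.title
    finally:
        # Always end the session, even if the page fails to load.
        driver.quit()


class FakeDriver:
    """Hypothetical stand-in for chrome_driver so the sketch runs without Selenium."""

    def __init__(self):
        self.title = ""
        self.closed = False

    def get(self, url):
        self.title = "Title of " + url

    def quit(self):
        self.closed = True


if __name__ == "__main__":
    driver = FakeDriver()
    print(collect_title(driver, "https://example.com"))
    print(driver.closed)
```

Closing the session in a finally block matters on Cloud Run, because browser sessions that are left open keep using the Selenium container's memory.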

@app.route("/")
def scrape():
    selenium_connection = RemoteConnectionV2(selenium_url, keep_alive=True)
    selenium_connection.set_remote_connection_authentication_headers()
    chrome_driver = webdriver.Remote(selenium_connection, DesiredCapabilities.CHROME)
    <INSERT_CODE_HERE>
    # End the browser session so it does not linger in the Selenium container.
    chrome_driver.quit()
    # A Flask view must return a response; returning None raises an error.
    return 'Scraping finished.'


if __name__ == "__main__":
    app.run(debug=True, host="0.0.0.0", port=int(os.environ.get("PORT", 8080)))

Debug Locally

There are two ways that you can debug this. First, you can debug the script locally by setting environment variables in your virtual environment and running the script. If you’ve followed all the previous instructions, your Python script will look for the SELENIUM_URL and IDENTITY_TOKEN environment variables. In PowerShell, you can set them as follows.

$env:SELENIUM_URL = '<SELENIUM_SERVICE_URL>/wd/hub'
$env:IDENTITY_TOKEN = $(gcloud auth print-identity-token)
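One thing to keep in mind while debugging: identity tokens are short-lived (about an hour, as far as I know), so a token you set earlier may have expired. An identity token is a JWT, so you can read its exp claim without any extra packages. The sketch below does that; the function names are hypothetical, the fake token exists only to make the example runnable, and the signature is not verified.

```python
import base64
import json
import time


def token_expiry(identity_token):
    """Return the 'exp' claim (Unix timestamp) from a JWT without verifying it."""
    payload_b64 = identity_token.split(".")[1]
    # JWT segments are base64url encoded without padding; restore it first.
    padding = "=" * (-len(payload_b64) % 4)
    payload = json.loads(base64.urlsafe_b64decode(payload_b64 + padding))
    return payload["exp"]


def is_expired(identity_token, now=None):
    """Return True if the token's expiry time has passed."""
    now = time.time() if now is None else now
    return token_expiry(identity_token) <= now


def fake_token(exp):
    """Build an unsigned, hypothetical token for demonstration purposes only."""
    def encode(data):
        raw = json.dumps(data).encode()
        return base64.urlsafe_b64encode(raw).decode().rstrip("=")
    return ".".join([encode({"alg": "none"}), encode({"exp": exp}), "signature"])


if __name__ == "__main__":
    token = fake_token(int(time.time()) + 3600)
    print(is_expired(token))
```

If the check reports an expired token, run gcloud auth print-identity-token again and update the environment variable.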

Debug in a Docker container

Alternatively, you can build a Docker image and run a container locally with the necessary environment variables set.

docker build . --tag <LOCAL_IMAGE_NAME>:latest

docker run \
    -p 8080:8080 \
    --env SELENIUM_URL=<SELENIUM_SERVICE_URL>/wd/hub \
    --env IDENTITY_TOKEN=$(gcloud auth print-identity-token) \
    <LOCAL_IMAGE_NAME>:latest

If everything works as planned, you can build the Docker image for this particular script. These are the packages that I put in my requirements.txt.

Flask==2.0.1
psycopg2==2.8.6
scipy==1.6.0
selenium==3.141.0
gunicorn==20.1.0
google-auth==1.31.0
requests==2.25.1

And this is my Dockerfile.

# syntax=docker/dockerfile:1
FROM python:3.9.1

# Allow statements and log messages to immediately appear in the Knative logs
ENV PYTHONUNBUFFERED True

# Copy files
WORKDIR /app
COPY scrape_social_media.py scrape_social_media.py
COPY requirements.txt requirements.txt

RUN apt-get update
RUN pip install --upgrade pip
RUN pip install -r requirements.txt

CMD exec gunicorn --bind 0.0.0.0:8080 --workers 1 --threads 8 --timeout 0 scrape_social_media:app

Deploy scraper

In this section, I assume you have built the Docker image for your scraping script. Pushing this image to the GCP Container Registry is completely analogous to pushing the Selenium image.

docker image tag <SCRAPER_IMAGE> us.gcr.io/<PROJECT_NAME>/<SCRAPER_IMAGE_NAME>
docker push us.gcr.io/<PROJECT_NAME>/<SCRAPER_IMAGE_NAME>

There are multiple things you should take care of when deploying your scraping script to Cloud Run.

- Provide the URL of the Selenium service we deployed earlier. This can be done easily by using environment variables with the parameter --set-env-vars …

- Link the service account that you’ll be using to authenticate with the Selenium service. If you don’t want to have authentication in place, you can remove the --service-account parameter.

gcloud run deploy <SCRAPER_SERVICE_NAME> \
    --image us.gcr.io/<PROJECT_NAME>/<SCRAPER_IMAGE_NAME> \
    --service-account <INVOKING_SERVICE_ACCOUNT> \
    --set-env-vars SELENIUM_URL=<SELENIUM_SERVICE_URL>/wd/hub \
    --allow-unauthenticated \
    --memory 1G

You are now set to scrape the web automatically. A next step would be to schedule the scraping job with GCP Scheduler, Tasks or Workflows.

Hi, please could you elaborate what you mean by mapping the port here: “Make sure you map the port, otherwise you’ll encounter the following error during deployment. This occurs because Cloud Run by default maps ports 8080, which isn’t applicable for Selenium.”?

Hi Alex,

As can be seen in the code snippets, make sure you explicitly map to port 4444. Otherwise, Cloud Run will map to port 8080 automatically.

Best regards

Roel

Hi Roel,

Thanks for the reply. However, the only time I see the code snippets above use port 4444 is when deploying the selenium service for the first time. All the other code snippets show you using port 8080?

Poor quality article, way too confusing for a beginner. You use variables like without actually giving an example or specifying. Could not get working following your steps, your set up through Google Cloud is non-existent and relies on me knowing what to set up.

Great article. Very helpful. Was able to reproduce. Any idea how to connect to remote Chrome from Java?

Great post, just reproduced it and everything is working fine, thank you!

How would I set the gcloud identity function to an environmental variable in Mac terminal?
